Detailed Requirements

- The upper bound of computational complexity is 1 GFLOPs.

- The upper bound of model size is 20MB.

- We provide the bounding boxes obtained by our detector for the training, validation, and test sets. Participants may also use their own face detectors.

- Apart from face detectors, no external datasets or models may be used. Test-time augmentation and multi-model ensemble strategies are not allowed either.

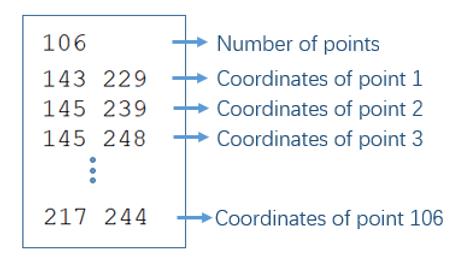

- During the validation phase, each team may send a compressed file (.zip/.tar/.tar.gz) containing the 2,000 landmark files (.txt) for the validation set to FLLC_ICPR2020@163.com. We will return the performance to the participants and update the leaderboard. Each .txt file should contain the number of key points along with the predicted coordinates of each point, in integer format. An example is shown in Fig. 2. The top-left corner of the image has coordinates [0, 0], where the first number is the horizontal coordinate and the second number is the vertical coordinate. If the face detector finds multiple faces, output only the one that has the highest IoU with the provided bounding box. Note that each team may submit only once per day.

- The test images will be released on Oct. 9, 2020 [00:00 a.m. UTC+1], and each team will have 24 hours to predict the test results. Both the predicted test results (in the same format as the validation results) and the training materials (code, models, and technical reports) must be sent to us before Oct. 10, 2020 [00:00 a.m. UTC+1]. We will re-implement the method to verify its correctness. Any team whose results we cannot reproduce, or whose computational complexity or model size does not satisfy the requirements, will be disqualified.
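The rule for images with several detected faces (keep the detection with the highest IoU against the provided bounding box) can be sketched as follows. This is only an illustration: the box layout `(x_min, y_min, x_max, y_max)` and the helper names `iou` and `pick_face` are assumptions, not part of the challenge materials.

```python
from typing import Sequence, Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def pick_face(detections: Sequence[Box], provided: Box) -> int:
    """Return the index of the detection that best overlaps the provided box."""
    if not detections:
        raise ValueError("no detections to choose from")
    return max(range(len(detections)), key=lambda i: iou(detections[i], provided))
```

For example, `pick_face(my_boxes, provided_box)` would give the index of the face whose landmarks should be written to the submission file, where `my_boxes` comes from a team's own detector.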

Fig. 2 Example of the landmark file.
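A minimal sketch of writing one landmark file is given below. The exact layout is defined by Fig. 2, which is not reproduced in text form here, so the layout used in this sketch (the point count on the first line, then one `x y` pair per line separated by a space) is an assumption that should be checked against the figure. The function names are hypothetical.

```python
from pathlib import Path
from typing import Iterable, Tuple


def format_landmarks(points: Iterable[Tuple[float, float]]) -> str:
    """Render landmarks as text: count first, then one integer 'x y' pair per line.

    Assumed layout; verify against Fig. 2 before submitting.
    x is the horizontal and y the vertical coordinate, with [0, 0] at the top-left.
    """
    rounded = [(int(round(x)), int(round(y))) for x, y in points]
    lines = [str(len(rounded))]
    lines += [f"{x} {y}" for x, y in rounded]
    return "\n".join(lines) + "\n"


def write_landmark_file(path: str, points: Iterable[Tuple[float, float]]) -> None:
    """Write the formatted landmarks to a .txt file."""
    Path(path).write_text(format_landmarks(points), encoding="ascii")
```

Rounding to the nearest integer is one possible choice; the rules only require integer coordinates and do not say how fractional predictions should be converted.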

Evaluation Criterion

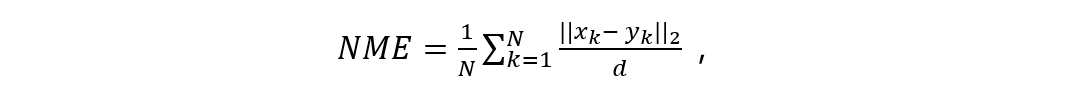

Submissions will be evaluated by the Area Under the Curve (AUC) of the Cumulative Error Distribution (CED) curve. In addition, further statistics from the CED curve, such as the failure rate and the average Normalized Mean Error (NME), will be returned to the participants for inclusion in their papers. The CED curve gives, for each error threshold, the percentage of test images whose error is less than that threshold. The AUC is the area under the CED curve calculated up to the threshold alpha, then divided by alpha. We set alpha to 0.08. Similarly, we regard each image with an NME larger than alpha as a failure. NME is computed as:

where “x” denotes the ground truth landmarks for a given face, “y” denotes the corresponding prediction and “d” is computed by d = sqrt(width_bbox * height_bbox). Here, “width_bbox” and “height_bbox” are the width and height of the enclosing rectangle of the ground truth landmarks, respectively.
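The metrics described above can be approximated locally with the sketch below. It assumes the common form of the error, $\mathrm{NME} = \frac{1}{N}\sum_{i=1}^{N} \lVert x_i - y_i \rVert_2 / d$ with $N$ the number of landmarks, which matches the definitions of x, y, and d given here but is not copied from the organizers' formula. The AUC is computed in closed form for the empirical CED step curve; the official script may integrate numerically and differ slightly. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def nme(gt: Sequence[Point], pred: Sequence[Point]) -> float:
    """Mean point-to-point distance, normalized by d = sqrt(width_bbox * height_bbox).

    The box is the enclosing rectangle of the ground-truth landmarks.
    """
    if not gt or len(gt) != len(pred):
        raise ValueError("gt and pred must be non-empty and of equal length")
    xs = [p[0] for p in gt]
    ys = [p[1] for p in gt]
    d = math.sqrt((max(xs) - min(xs)) * (max(ys) - min(ys)))
    if d == 0:
        raise ValueError("degenerate ground-truth bounding box")
    total = sum(math.hypot(gx - px, gy - py) for (gx, gy), (px, py) in zip(gt, pred))
    return total / (len(gt) * d)


def failure_rate(errors: Sequence[float], alpha: float = 0.08) -> float:
    """Fraction of images whose NME is larger than alpha."""
    return sum(e > alpha for e in errors) / len(errors)


def ced_auc(errors: Sequence[float], alpha: float = 0.08) -> float:
    """Area under the empirical CED curve on [0, alpha], divided by alpha.

    Each image contributes a step at its error e, so the exact area is
    the mean of max(0, alpha - e); dividing by alpha gives a value in [0, 1].
    """
    return sum(max(0.0, 1.0 - e / alpha) for e in errors) / len(errors)
```

As a usage example, collecting `nme(gt, pred)` for every validation image into a list `errors` and calling `ced_auc(errors)` and `failure_rate(errors)` gives local estimates to compare against the numbers returned by the organizers.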